Share the latest Microsoft DP-200 exam dumps: 13 exam practice questions to test your strength. Pass4itsure offers complete Microsoft DP-200 exam questions and answers. If you want to pass the exam easily, you can choose Pass4itsure. We have also shared the latest DP-200 PDF, which you can download online!

Download the Microsoft DP-200 PDF Online

[PDF] Free Microsoft DP-200 pdf dumps download from Google Drive: https://drive.google.com/open?id=1lJNE54_9AAyU9kzPI_8NR-PPFqNYM7ys

[PDF] Free Full Microsoft pdf dumps download from Google Drive: https://drive.google.com/open?id=1gdQrKIsiLyDEsZ24FxsyukNPYmpSUDDO

Valid information provided by Microsoft officials

Exam DP-200: Implementing an Azure Data Solution (beta) – Microsoft: https://www.microsoft.com/en-us/learning/exam-dp-200.aspx

Latest effective Microsoft DP-200 Exam Practice Tests

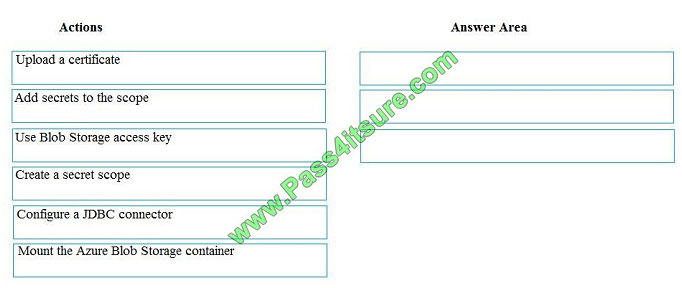

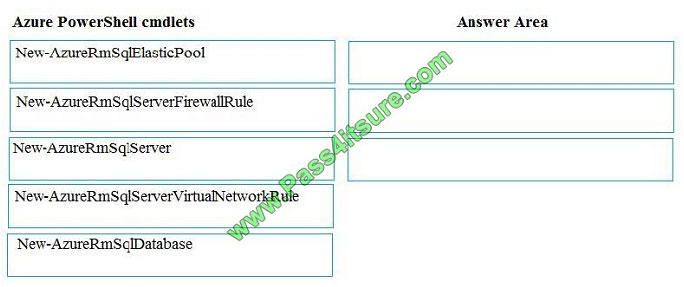

QUESTION 1

You manage the Microsoft Azure Databricks environment for a company. You must be able to access a private Azure Blob Storage account. Data must be available to all Azure Databricks workspaces. You need to provide the data access.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place: Correct Answer:


Step 1: Create a secret scope

Step 2: Add secrets to the scope


Step 3: Mount the Azure Blob Storage container

You can mount a Blob Storage container or a folder inside a container through the Databricks File System (DBFS). The mount is a pointer to a Blob Storage container, so the data is never synced locally.

Note: To mount a Blob Storage container or a folder inside a container, use the following command:

```python
dbutils.fs.mount(
    source = "wasbs://<container-name>@<storage-account-name>.blob.core.windows.net",
    mount_point = "/mnt/<mount-name>",
    extra_configs = {"<conf-key>": dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")})
```

where:

`dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")` gets the key that has been stored as a secret in a secret scope.
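The mount call needs a source URI and a configuration key that both embed the storage account name. As a minimal sketch, assuming account-key authentication (so the configuration key follows the `fs.azure.account.key.<storage-account-name>.blob.core.windows.net` pattern), the hypothetical helper `build_mount_args` below assembles the keyword arguments for `dbutils.fs.mount`. The secret lookup is passed in as a function so the sketch runs outside Databricks.

```python
from typing import Callable, Dict


def build_mount_args(
    container: str,
    account: str,
    mount_name: str,
    scope: str,
    key_name: str,
    get_secret: Callable[..., str],
) -> Dict[str, object]:
    """Hypothetical helper: assemble kwargs for dbutils.fs.mount (account-key auth assumed)."""
    source = f"wasbs://{container}@{account}.blob.core.windows.net"
    # Spark configuration key used for storage-account-key authentication.
    conf_key = f"fs.azure.account.key.{account}.blob.core.windows.net"
    # The key is read from the secret scope, never hard-coded in the notebook.
    secret = get_secret(scope=scope, key=key_name)
    return {
        "source": source,
        "mount_point": f"/mnt/{mount_name}",
        "extra_configs": {conf_key: secret},
    }
```

Inside a notebook, the call might look like `dbutils.fs.mount(**build_mount_args("raw", "contosodata", "raw", "storage-scope", "account-key", dbutils.secrets.get))`, where every name is a placeholder.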

References:

https://docs.databricks.com/spark/latest/data-sources/azure/azure-storage.html

QUESTION 2

You develop data engineering solutions for a company. The company has on-premises Microsoft SQL Server databases at multiple locations.

The company must integrate data with Microsoft Power BI and Microsoft Azure Logic Apps. The solution must avoid single points of failure during connection and transfer to the cloud. The solution must also minimize latency.

You need to secure the transfer of data between on-premises databases and Microsoft Azure.

What should you do?

A. Install a standalone on-premises Azure data gateway at each location

B. Install an on-premises data gateway in personal mode at each location

C. Install an Azure on-premises data gateway at the primary location

D. Install an Azure on-premises data gateway as a cluster at each location

Correct Answer: D

You can create high-availability clusters of on-premises data gateway installations to ensure your organization can access on-premises data resources used in Power BI reports and dashboards. Such clusters allow gateway administrators to group gateways to avoid single points of failure in accessing on-premises data resources. The Power BI service always uses the primary gateway in the cluster unless it's not available. In that case, the service switches to the next gateway in the cluster, and so on.
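The failover order described above amounts to "try each gateway in cluster order and use the first one that is available". The sketch below is a hypothetical simulation of that rule for one location's cluster, not the Power BI service's implementation; the gateway names and the availability check are placeholders.

```python
from typing import Callable, Optional, Sequence


def select_gateway(cluster: Sequence[str], is_available: Callable[[str], bool]) -> Optional[str]:
    """Return the first available gateway, trying the primary (index 0) first."""
    for gateway in cluster:
        if is_available(gateway):
            return gateway
    # Every gateway in the cluster is down: the single point of failure a cluster avoids
    # only while at least one member is still up.
    return None
```

Installing a cluster at each location gives every site its own fallback list, which is why a single gateway at the primary location would not satisfy the requirement.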

References: https://docs.microsoft.com/en-us/power-bi/service-gateway-high-availability-clusters

QUESTION 3

You manage a solution that uses Azure HDInsight clusters.

You need to implement a solution to monitor cluster performance and status.

Which technology should you use?

A. Azure HDInsight .NET SDK

B. Azure HDInsight REST API

C. Ambari REST API

D. Azure Log Analytics

E. Ambari Web UI

Correct Answer: E

Ambari is the recommended tool for monitoring utilization across the whole cluster. The Ambari dashboard shows easily glanceable widgets that display metrics such as CPU, network, YARN memory, and HDFS disk usage. The specific metrics shown depend on cluster type. The "Hosts" tab shows metrics for individual nodes so you can ensure the load on your cluster is evenly distributed.

The Apache Ambari project is aimed at making Hadoop management simpler by developing software for provisioning, managing, and monitoring Apache Hadoop clusters. Ambari provides an intuitive, easy-to-use Hadoop management web UI backed by its RESTful APIs.
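Because the Ambari web UI is backed by REST APIs, the same cluster information can be retrieved programmatically. A minimal sketch, assuming the HDInsight convention that Ambari is served at `https://<cluster-name>.azurehdinsight.net` and that the cluster login uses HTTP basic authentication; the function only builds the request, and `urllib.request.urlopen(req)` would send it.

```python
import base64
import urllib.request


def ambari_cluster_request(cluster_name: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the Ambari cluster resource (cluster name and login are placeholders)."""
    url = f"https://{cluster_name}.azurehdinsight.net/api/v1/clusters/{cluster_name}"
    # HTTP basic authentication with the cluster login account.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
```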

References: https://azure.microsoft.com/en-us/blog/monitoring-on-hdinsight-part-1-an-overview/

https://ambari.apache.org/

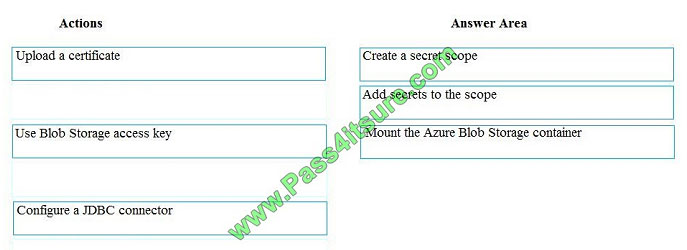

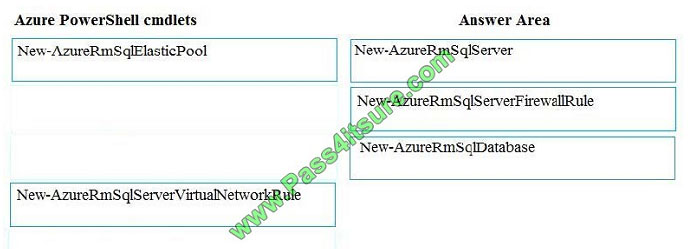

QUESTION 4

You plan to create a new single database instance of Microsoft Azure SQL Database.

The database must only allow communication from the data engineer's workstation. You must connect directly to the instance by using Microsoft SQL Server Management Studio.

You need to create and configure the database. Which three Azure PowerShell cmdlets should you use to develop the solution? To answer, move the appropriate cmdlets from the list of cmdlets to the answer area and arrange them in the correct order.

Select and Place: Correct Answer:


Step 1: New-AzureSqlServer

Create a server.

Step 2: New-AzureRmSqlServerFirewallRule

New-AzureRmSqlServerFirewallRule creates a firewall rule for a SQL Database server.

It can be used to create a server firewall rule that allows access from the specified IP range.

Step 3: New-AzureRmSqlDatabase

Example: Create a database on a specified server

```powershell
PS C:\> New-AzureRmSqlDatabase -ResourceGroupName "ResourceGroup01" -ServerName "Server01" -DatabaseName "Database01"
```
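A server firewall rule is defined by a start and an end IP address, so admitting only the data engineer's workstation means passing the same public IP to both the `-StartIpAddress` and `-EndIpAddress` parameters of `New-AzureRmSqlServerFirewallRule`. The sketch below models that inclusive range check with Python's `ipaddress` module; the addresses are hypothetical documentation-range values, and the real check is performed by Azure, not by client code.

```python
import ipaddress


def rule_allows(client_ip: str, start_ip: str, end_ip: str) -> bool:
    """True if client_ip lies in the inclusive range [start_ip, end_ip]."""
    client = ipaddress.ip_address(client_ip)
    return ipaddress.ip_address(start_ip) <= client <= ipaddress.ip_address(end_ip)
```

With start and end both set to the workstation address, any other client falls outside the range.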

References: https://docs.microsoft.com/en-us/azure/sql-database/scripts/sql-database-create-and-configure-database-powershell?toc=%2fpowershell%2fmodule%2ftoc.json

QUESTION 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in Parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Use Azure Data Factory to convert the parquet files to CSV files
2. Create an external data source pointing to the Azure storage account
3. Create an external file format and external table using the external data source
4. Load the data using the INSERT…SELECT statement

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

There is no need to convert the Parquet files to CSV files: PolyBase can read Parquet directly through an external file format.

Load the data by using the CREATE TABLE AS SELECT (CTAS) statement.
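The corrected flow keeps the files in Parquet and loads them with PolyBase plus CTAS. As a minimal sketch, Python is used here only to assemble the T-SQL script; the object names (`AdlsGen2Source`, `ParquetFormat`, the `dbo.ext_` prefix) and the credential name are hypothetical, and the database scoped credential is assumed to exist already. The generated script would be run in the data warehouse, for example from SSMS.

```python
def polybase_parquet_load(
    account: str,
    container: str,
    folder: str,
    columns: str,
    target_table: str,
    credential: str = "AdlsCredential",
) -> str:
    """Build a T-SQL script that loads Parquet files with PolyBase and CTAS (names are hypothetical)."""
    return f"""
CREATE EXTERNAL DATA SOURCE AdlsGen2Source
WITH (TYPE = HADOOP,
      LOCATION = 'abfss://{container}@{account}.dfs.core.windows.net',
      CREDENTIAL = {credential});

-- Parquet is read natively, so no conversion step is required.
CREATE EXTERNAL FILE FORMAT ParquetFormat
WITH (FORMAT_TYPE = PARQUET);

CREATE EXTERNAL TABLE dbo.ext_{target_table} ({columns})
WITH (LOCATION = '/{folder}/',
      DATA_SOURCE = AdlsGen2Source,
      FILE_FORMAT = ParquetFormat);

-- CTAS creates and loads the internal table in a single parallel operation.
CREATE TABLE dbo.{target_table}
WITH (DISTRIBUTION = ROUND_ROBIN)
AS SELECT * FROM dbo.ext_{target_table};
""".strip()
```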

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

QUESTION 6

A company builds an application to allow developers to share and compare code. The conversations, code snippets, and links shared by people in the application are stored in a Microsoft Azure SQL Database instance. The application allows for searches of historical conversations and code snippets.

When users share code snippets, the code snippet is compared against previously shared code snippets by using a combination of Transact-SQL functions including SUBSTRING, FIRST_VALUE, and SQRT. If a match is found, a link to the match is added to the conversation.

Customers report the following issues:

Delays occur during live conversations

A delay occurs before matching links appear after code snippets are added to conversations

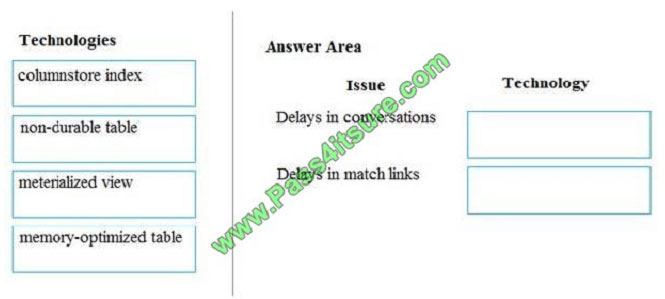

You need to resolve the performance issues.

Which technologies should you use? To answer, drag the appropriate technologies to the correct issues. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place: Correct Answer:


Box 1: memory-optimized table

In-Memory OLTP can provide great performance benefits for transaction processing, data ingestion, and transient data scenarios.

Box 2: materialized view

To support efficient querying, a common solution is to generate, in advance, a view that materializes the data in a format suited to the required results set. The Materialized View pattern describes generating prepopulated views of data in environments where the source data isn't in a suitable format for querying, where generating a suitable query is difficult, or where query performance is poor due to the nature of the data or the data store.

These materialized views, which only contain data required by a query, allow applications to quickly obtain the information they need. In addition to joining tables or combining data entities, materialized views can include the current values of calculated columns or data items, the results of combining values or executing transformations on the data items, and values specified as part of the query. A materialized view can even be optimized for just a single query.
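The Materialized View pattern trades extra work at write time for fast reads. As a language-neutral illustration of the pattern (not Azure SQL's own materialized view feature), the sketch below keeps a prepopulated index of normalized snippets, so matching a new snippet becomes a dictionary lookup instead of re-running string functions over every earlier snippet. The whitespace-collapsing normalization rule and the class name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def normalize(snippet: str) -> str:
    """Hypothetical normalization: collapse whitespace so formatting-only changes still match."""
    return " ".join(snippet.split())


class SnippetMatchView:
    """Prepopulated lookup that is updated on write and read without recomputation."""

    def __init__(self) -> None:
        self._index: Dict[str, List[int]] = defaultdict(list)

    def add(self, snippet_id: int, snippet: str) -> List[int]:
        """Store a snippet and return the ids of earlier snippets that match it."""
        key = normalize(snippet)
        matches = list(self._index[key])  # copy before recording the new id
        self._index[key].append(snippet_id)
        return matches
```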

References:

https://docs.microsoft.com/en-us/azure/architecture/patterns/materialized-view

QUESTION 7

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

Scale to minimize costs

Be monitored for cluster performance

You need to recommend a tool that will monitor clusters and provide information to suggest how to scale.

Solution: Monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: A

HDInsight provides cluster-specific management solutions that you can add for Azure Monitor logs. Management solutions add functionality to Azure Monitor logs, providing additional data and analysis tools. These solutions collect important performance metrics from your HDInsight clusters and provide the tools to search the metrics. These solutions also provide visualizations and dashboards for most cluster types supported in HDInsight. By using the metrics that you collect with the solution, you can create custom monitoring rules and alerts.
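The collected metrics are what inform a scaling decision. As a minimal sketch, assuming per-node utilization samples (for example, YARN memory percentages) have already been exported from the Azure Monitor logs workspace, the hypothetical helper below averages them and suggests a direction. The 80% and 30% thresholds are illustrative assumptions, not Azure defaults.

```python
from statistics import mean
from typing import Dict, List


def suggest_scaling(samples: Dict[str, List[float]], high: float = 80.0, low: float = 30.0) -> str:
    """Suggest 'scale out', 'scale in', or 'no change' from per-node utilization samples (%)."""
    node_averages = [mean(values) for values in samples.values() if values]
    if not node_averages:
        return "no change"  # nothing collected yet
    cluster_average = mean(node_averages)
    if cluster_average >= high:
        return "scale out"  # sustained pressure: add worker nodes
    if cluster_average <= low:
        return "scale in"  # idle capacity: remove nodes to cut cost
    return "no change"
```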

References: https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

QUESTION 8

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

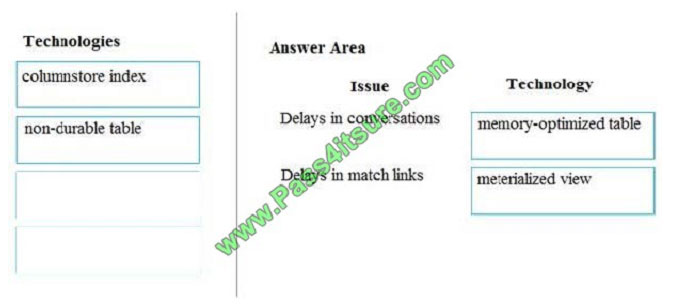

You need to configure data encryption for external applications.

Solution:

1. Access the Always Encrypted Wizard in SQL Server Management Studio
2. Select the column to be encrypted
3. Set the encryption type to Randomized
4. Configure the master key to use the Windows Certificate Store
5. Validate configuration results and deploy the solution

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

Use the Azure Key Vault, not the Windows Certificate Store, to store the master key.

Note: The Master Key Configuration page is where you set up your CMK (Column Master Key) and select the key store provider where the CMK will be stored. Currently, you can store a CMK in the Windows certificate store, Azure Key Vault, or a hardware security module (HSM).

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-always-encrypted-azure-key-vault
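For an external application to read the encrypted column, its driver must have Always Encrypted enabled and must be able to reach the column master key. One plausible reading of the answer is that a Windows certificate store is local to one machine, whereas Azure Key Vault can be reached by any authorized application. A minimal sketch, assuming the Microsoft ODBC Driver 17 for SQL Server, whose `ColumnEncryption=Enabled` keyword turns on Always Encrypted; the server and database names are placeholders, and credentials plus Key Vault authentication keywords are omitted because they depend on the environment and driver version.

```python
def always_encrypted_conn_str(server: str, database: str) -> str:
    """Build an ODBC connection string with Always Encrypted enabled (placeholders, no credentials)."""
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",
        "Server": f"tcp:{server}.database.windows.net,1433",
        "Database": database,
        "Encrypt": "yes",
        # Lets the driver transparently encrypt parameters and decrypt result columns.
        "ColumnEncryption": "Enabled",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"
```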

QUESTION 9

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

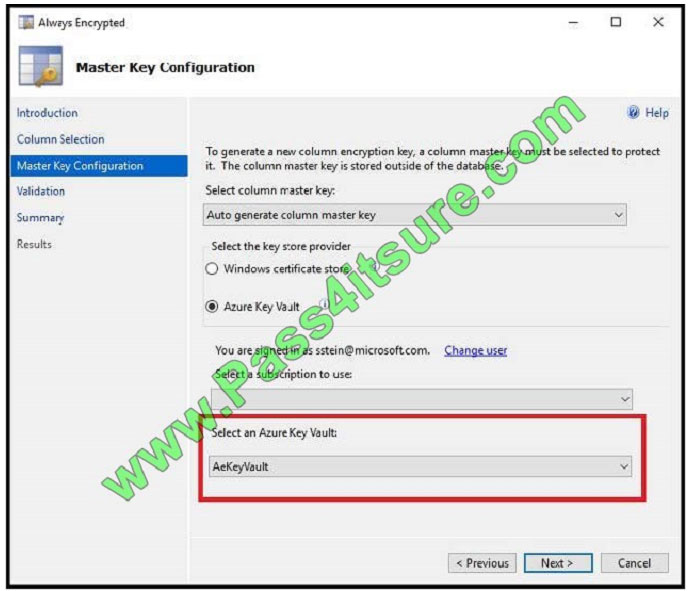

You need to implement diagnostic logging for Data Warehouse monitoring.

Which log should you use?

A. RequestSteps

B. DmsWorkers

C. SqlRequests

D. ExecRequests

Correct Answer: C

Scenario:

The Azure SQL Data Warehouse cache must be monitored when the database is being used.

References: https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-pdw-sql-requests-transact-sq

QUESTION 10

A company runs Microsoft Dynamics CRM with Microsoft SQL Server on-premises. SQL Server Integration Services (SSIS) packages extract data from Dynamics CRM APIs and load the data into a SQL Server data warehouse.

The datacenter is running out of capacity. Because of the network configuration, you must extract on-premises data to the cloud over HTTPS. You cannot open any additional ports. The solution must implement the least amount of effort.

You need to create the pipeline system.

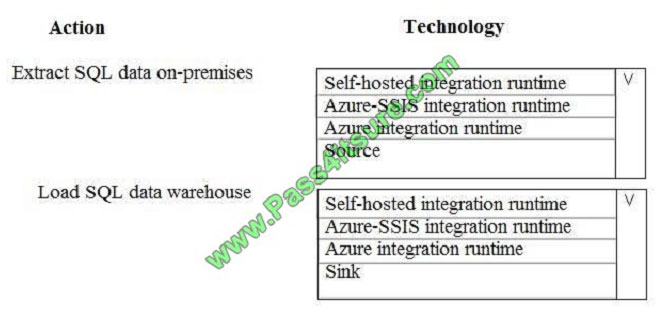

Which component should you use? To answer, select the appropriate technology in the dialog box in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area: Correct Answer:


Box 1: Source

A Copy activity requires source and sink linked services to define the direction of data flow.

Copying between a cloud data source and a data source in a private network: if either the source or the sink linked service points to a self-hosted IR, the copy activity is executed on that self-hosted integration runtime.

Box 2: Self-hosted integration runtime

A self-hosted integration runtime can run copy activities between a cloud data store and a data store in a private network, and it can dispatch transform activities against compute resources in an on-premises network or an Azure virtual network. A self-hosted integration runtime must be installed on an on-premises machine or a virtual machine (VM) inside a private network.

References:

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime
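The runtime-selection rule quoted above can be modeled directly. In this minimal sketch the `LinkedService` class, the linked service names, and the runtime names are hypothetical; in practice Azure Data Factory resolves the runtime itself, and the all-cloud branch is simplified to a generic label.

```python
from dataclasses import dataclass


@dataclass
class LinkedService:
    name: str
    runtime: str  # integration runtime the linked service connects through
    self_hosted: bool = False


def copy_runtime(source: LinkedService, sink: LinkedService) -> str:
    """Pick the runtime for a Copy activity, following the self-hosted rule."""
    # If either end points to a self-hosted IR, the copy runs on that IR.
    for service in (source, sink):
        if service.self_hosted:
            return service.runtime
    # Otherwise both ends are cloud stores and an Azure IR performs the copy.
    return "Azure integration runtime"
```

Because the self-hosted runtime only makes outbound HTTPS connections to the service, this setup fits the "no additional ports" constraint.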

QUESTION 11

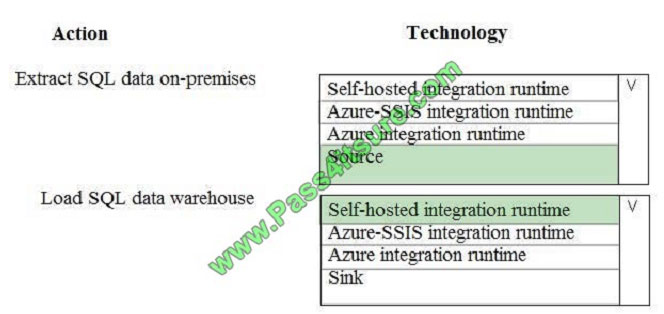

You need to set up Azure Data Factory pipelines to meet data movement requirements. Which integration runtime should you use?

A. self-hosted integration runtime

B. Azure-SSIS Integration Runtime

C. .NET Common Language Runtime (CLR)

D. Azure integration runtime

Correct Answer: A

The following table describes the capabilities and network support for each of the integration runtime types:

Scenario: The solution must support migrating databases that support external and internal applications to Azure SQL Database. The migrated databases will be supported by Azure Data Factory pipelines for the continued movement, migration, and updating of data both in the cloud and from local core business systems and repositories.

References: https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime
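The capabilities table mentioned above is not reproduced in this post. As a rough summary, assuming the comparison on the integration runtime concepts page cited above as it read when this question was current (Azure IR: data flow, data movement, and activity dispatch on public networks; self-hosted IR: data movement and activity dispatch on public or private networks; Azure-SSIS IR: SSIS package execution on public or private networks), the sketch below encodes it as a lookup.

```python
from typing import Dict, List, Set

# Capability summary assumed from the ADF integration runtime overview table.
IR_CAPABILITIES: Dict[str, Dict[str, Set[str]]] = {
    "Azure": {
        "capabilities": {"data flow", "data movement", "activity dispatch"},
        "networks": {"public"},
    },
    "Self-hosted": {
        "capabilities": {"data movement", "activity dispatch"},
        "networks": {"public", "private"},
    },
    "Azure-SSIS": {
        "capabilities": {"SSIS package execution"},
        "networks": {"public", "private"},
    },
}


def runtimes_for(capability: str, network: str) -> List[str]:
    """List the integration runtime types that offer a capability on a given network."""
    return [
        name
        for name, info in IR_CAPABILITIES.items()
        if capability in info["capabilities"] and network in info["networks"]
    ]
```

Moving data from local core business systems requires data movement on a private network, which leaves only the self-hosted runtime.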

QUESTION 12

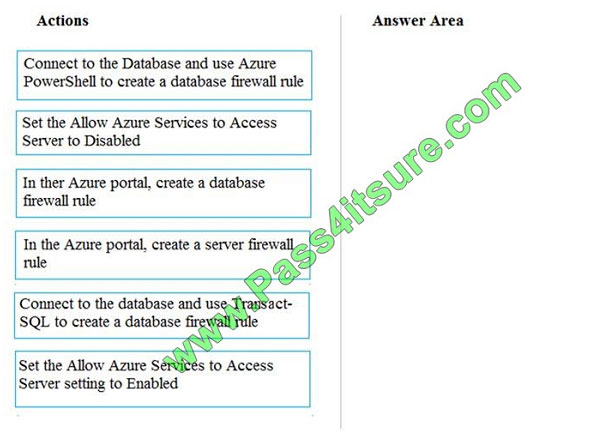

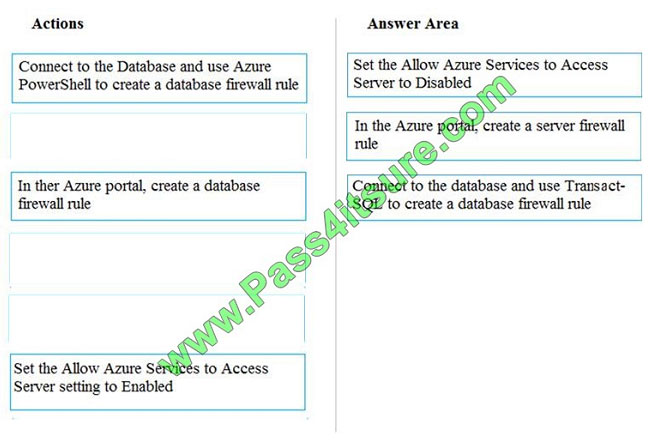

You need to set up access to Azure SQL Database for Tier 7 and Tier 8 partners.

Which three actions should you perform in sequence? To answer, move the appropriate three actions from the list of

actions to the answer area and arrange them in the correct order.

Select and Place: Correct Answer:


Tier 7 and 8 data access is constrained to single endpoints managed by partners for access

Step 1: Set the Allow Azure Services to Access Server setting to Disabled

Set Allow access to Azure services to OFF for the most secure configuration.

By default, access through the SQL Database firewall is enabled for all Azure services, under Allow access to Azure services. Choose OFF to disable access for all Azure services.

Note: The firewall pane has an ON/OFF button that is labeled Allow access to Azure services. The ON setting allows communications from all Azure IP addresses and all Azure subnets. These Azure IPs or subnets might not be owned by you. This ON setting is probably more open than you want your SQL Database to be. The virtual network rule feature offers much finer-grained control.

Step 2: In the Azure portal, create a server firewall rule

Set up SQL Database server firewall rules

Server-level IP firewall rules apply to all databases within the same SQL Database server.

To set up a server-level firewall rule:

In the Azure portal, select SQL databases from the left-hand menu, and select your database on the SQL databases page.

On the Overview page, select Set server firewall. The Firewall settings page for the database server opens.

Step 3: Connect to the database and use Transact-SQL to create a database firewall rule

Database-level firewall rules can only be configured using Transact-SQL (T-SQL) statements, and only after you've configured a server-level firewall rule.

To set up a database-level firewall rule:

Connect to the database, for example using SQL Server Management Studio.

In Object Explorer, right-click the database and select New Query.

In the query window, add this statement and modify the IP address to your public IP address:

```sql
EXECUTE sp_set_database_firewall_rule N'Example DB Rule', '0.0.0.4', '0.0.0.4';
```

On the toolbar, select Execute to create the firewall rule.
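Once both rule levels exist, a partner's connection is matched against them in a fixed order. A minimal sketch, assuming the evaluation order described in the Azure SQL Database firewall documentation (database-level rules are checked first, then server-level rules), with Allow access to Azure services turned off as in Step 1. The rule lists and addresses are hypothetical documentation-range values.

```python
import ipaddress
from typing import List, Optional, Tuple

Rule = Tuple[str, str]  # (start IP, end IP), inclusive


def in_any(client_ip: str, rules: List[Rule]) -> bool:
    """True if client_ip falls inside any inclusive (start, end) range."""
    client = ipaddress.ip_address(client_ip)
    return any(
        ipaddress.ip_address(start) <= client <= ipaddress.ip_address(end)
        for start, end in rules
    )


def granting_rule_level(client_ip: str, database_rules: List[Rule], server_rules: List[Rule]) -> Optional[str]:
    """Return which rule level admits the client: 'database', 'server', or None if blocked."""
    if in_any(client_ip, database_rules):
        return "database"  # grants access to the database that holds the rule only
    if in_any(client_ip, server_rules):
        return "server"  # grants access to every database on the server
    return None
```

Giving each partner endpoint a single-address database-level rule keeps its access scoped to one database, which matches the single-endpoint constraint for Tier 7 and 8.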

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-security-tutorial

QUESTION 13

You need to process and query ingested Tier 9 data.

Which two options should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Azure Notification Hub

B. Transact-SQL statements

C. Azure Cache for Redis

D. Apache Kafka statements

E. Azure Event Grid

F. Azure Stream Analytics

Correct Answer: DF

Explanation:

Event Hubs provides a Kafka endpoint that can be used by your existing Kafka based applications as an alternative to

running your own Kafka cluster.

You can stream data into Kafka-enabled Event Hubs and process it with Azure Stream Analytics, in the following steps:

Create a Kafka enabled Event Hubs namespace.

Create a Kafka client that sends messages to the event hub.

Create a Stream Analytics job that copies data from the event hub into Azure Blob Storage.

Scenario: Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company's main office.

References: https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-kafka-stream-analytics
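The Kafka-client step in the explanation mostly comes down to client configuration. A minimal sketch, assuming a librdkafka-based client such as the `confluent-kafka` Python package; the namespace and connection string are placeholders. Event Hubs accepts Kafka clients on port 9093 over SASL_SSL with the PLAIN mechanism, using the literal user name `$ConnectionString` and the namespace connection string as the password.

```python
from typing import Dict


def event_hubs_kafka_config(namespace: str, connection_string: str) -> Dict[str, str]:
    """Client settings for a Kafka producer that targets an Event Hubs namespace."""
    return {
        # Event Hubs exposes its Kafka endpoint on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name tells Event Hubs to treat the password as a connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }
```

With `confluent-kafka` installed, the dictionary could be passed to `confluent_kafka.Producer(...)`; a Stream Analytics job then reads the same event hub as its input.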